+++
title = 'AIGC'
tags = ["AI"]
draft = false
+++

Image

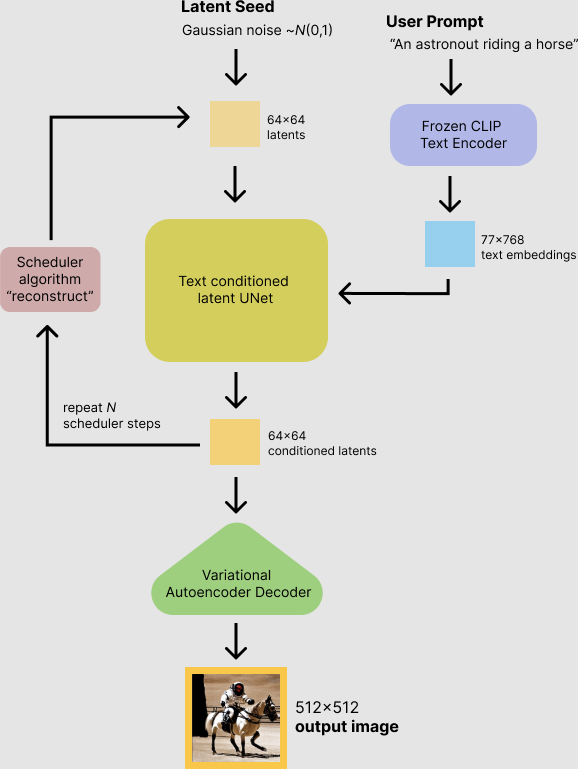

Stable Diffusion

Main components of a Stable Diffusion pipeline:

- text_encoder: encodes the prompt into embeddings. Stable Diffusion uses CLIP, but other diffusion models may use other encoders such as BERT
- tokenizer: must match the one used by the text_encoder model
- scheduler: the algorithm that progressively adds noise to the image during training and controls the step-by-step denoising at inference
- unet: the denoising model that predicts the noise in the latent representation at each step
- vae: the autoencoder that decodes latent representations into real images
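The scheduler's training-time job of progressively adding noise can be written in closed form. Given a variance schedule beta_t and alpha_bar_t = prod(1 - beta_s), a noisy sample at step t is sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise. The sketch below is a minimal stdlib illustration of that DDPM-style forward process. It assumes a linear beta schedule from 1e-4 to 0.02 over 1000 steps, which are the classic DDPM values. Stable Diffusion's own scheduler config uses different values and operates on VAE latents, not pixels.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Betas rising linearly from beta_start to beta_end (assumed DDPM-style values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas):
    """Cumulative product of (1 - beta_t): how much of the original signal survives at step t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def add_noise(x0, t, abar, rng=random):
    """Sample x_t ~ N(sqrt(abar_t) * x0, (1 - abar_t) * I) directly, without looping over steps."""
    signal = math.sqrt(abar[t])
    noise_scale = math.sqrt(1.0 - abar[t])
    return [signal * x + noise_scale * rng.gauss(0.0, 1.0) for x in x0]


betas = linear_beta_schedule()
abar = alpha_bars(betas)
x0 = [0.5, -0.25, 1.0]  # hypothetical tiny "latent"
print(add_noise(x0, 10, abar))   # still close to x0
print(add_noise(x0, 999, abar))  # almost pure Gaussian noise
```

Sampling x_t in one shot is what makes training cheap. Each training example picks a random t, noises the latent with the formula above, and asks the unet to predict the noise that was added.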

Tutorial

- Generative Modeling by Estimating Gradients of the Data Distribution

- The Annotated Diffusion Model

- Stable Diffusion with Diffusers

Install

conda create --name=ai python=3.10.9

sudo apt install nvidia-cuda-toolkit

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

pip install transformers

noglob pip3 install diffusers["torch"]

pip install -U xformers
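You can check which of these packages actually ended up in the active environment by reading their installed metadata. This stdlib-only sketch looks up distribution versions with `importlib.metadata` and never imports torch itself. To confirm GPU support, you would additionally run `torch.cuda.is_available()` inside the same environment.

```python
from importlib import metadata

# Distribution names installed by the commands above
PACKAGES = ["torch", "torchvision", "torchaudio", "transformers", "diffusers", "xformers"]


def installed_versions(packages=PACKAGES):
    """Return {package: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions().items():
    print(f"{name:14} {version or 'NOT INSTALLED'}")
```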

Colab

Stable Diffusion WebUI

References

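- Useful AI art extensions for Stable Diffusion WebUI: Chinese localization, ControlNet

- Installing Stable Diffusion WebUI on macOS to run AI art generation on your own computer

- How to write Stable Diffusion prompts, with examples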

Torch version

pip install torch==1.12.1 torchvision==0.13.1

export CUDA_VISIBLE_DEVICES=-1
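`CUDA_VISIBLE_DEVICES=-1` hides every GPU from the process, so PyTorch falls back to the CPU. The same can be done from inside a Python script, provided the variable is set before torch initializes CUDA. The safest place is before `import torch`. The `force_cpu` helper is a hypothetical name used only for illustration.

```python
import os


def force_cpu():
    """Hide all CUDA devices from this process; must run before torch initializes CUDA."""
    os.environ["CUDA_VISIBLE_DEVICES"] = "-1"
    return os.environ["CUDA_VISIBLE_DEVICES"]


force_cpu()
# Import torch only after this point; torch.cuda.is_available() should then report False.
```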

Command line arguments

--skip-torch-cuda-test

--upcast-sampling

--no-half-vae

--no-half

--use-cpu interrogate|all

--num_cpu_threads_per_process=6

--precision full

--nowebui
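With `--nowebui` the webui starts only its HTTP API, with no browser UI. Below is a minimal client sketch that uses only the standard library. The address `http://127.0.0.1:7861` and the `/sdapi/v1/txt2img` endpoint are assumptions based on AUTOMATIC1111's defaults in API-only mode, so adjust them to your own launch flags (for example `--port`).

```python
import base64
import json
import urllib.request

# Assumed default address of the webui API when launched with --nowebui
API_URL = "http://127.0.0.1:7861/sdapi/v1/txt2img"


def build_payload(prompt, negative_prompt="", steps=20, cfg_scale=7.0, width=512, height=512):
    """Collect txt2img parameters into the JSON body sent to the API (assumed field names)."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": steps,
        "cfg_scale": cfg_scale,
        "width": width,
        "height": height,
    }


def txt2img(payload, url=API_URL, timeout=600):
    """POST the payload and return the generated images as raw PNG bytes."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Each generated image comes back as a base64-encoded string in "images"
    return [base64.b64decode(image) for image in body["images"]]
```

Once the server is running, `images = txt2img(build_payload("a cat"))` returns a list of PNG byte strings that can be written straight to disk.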

Models

- Anime
  - Anything
  - Counterfeit
  - Dreamlike Diffusion
- Realistic
  - Deliberate
  - Realistic Vision
  - LOFI
  - ChilloutMix
- 2.5D
  - Never Ending Dream
  - Protogen
  - Guofen3

Lora

- Ghibli Style
  - Base model: Anything v4.5/AbyssOrangeMix2
  - VAE: OrangeMixs
  - Prompt: `<lora:ghibli_style_offset:1>`
- Anime Lineart
  - Base model: Anything v4.5/AbyssOrangeMix2
  - VAE: OrangeMixs
  - Sampler: DPM++ 2M Karras, 20 steps, CFG 7
  - Prompt: `lineart, monochrome, <lora:animeoutlineV4_16:1>`
  - Negative embeddings: EasyNegative, badhandv4
- Colorwater
  - Weight: 0.8-1
  - CFG: 3-6
  - Prompt: `<lora:try2:1>`
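In the webui, a LoRA is activated by a prompt tag of the form `<lora:filename:weight>`. When scripting generations, you can assemble these tags and the per-LoRA settings programmatically. This sketch assumes the LoRA files are named as in the notes above. The settings keys (`prompt`, `negative_prompt`, `sampler_name`, `steps`, `cfg_scale`) are assumed to match the webui txt2img API fields, and newer webui versions may expect the Karras schedule as a separate field.

```python
def lora_tag(name, weight=1.0):
    """Format one LoRA reference in the webui prompt syntax <lora:filename:weight>."""
    return f"<lora:{name}:{weight:g}>"


def build_prompt(tags, loras=()):
    """Join plain prompt tags and (name, weight) LoRA pairs into one comma-separated prompt."""
    return ", ".join(list(tags) + [lora_tag(name, weight) for name, weight in loras])


# "Anime Lineart" settings from the notes above
lineart = {
    "prompt": build_prompt(["lineart", "monochrome"], [("animeoutlineV4_16", 1)]),
    # Negative embeddings are referenced by their file name in the negative prompt
    "negative_prompt": "EasyNegative, badhandv4",
    "sampler_name": "DPM++ 2M Karras",
    "steps": 20,
    "cfg_scale": 7,
}

# "Colorwater" at a weight inside its suggested 0.8-1 range
colorwater_prompt = build_prompt([], [("try2", 0.8)])
```

The `:g` format keeps whole-number weights short (`1`, not `1.0`), so the tags match the way they are written by hand.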

Sites

- original

- GitHub - diffusers

- Models

- Gallery

- Prompts

- Art styles

References

- [Translation] Stable Diffusion prompt: a definitive guide

- https://stable-diffusion-art.com/tutorials/

- Venus

- Midjourney learning guide

- GitHub - awesome-conditional-content-generation

Video

GitHub - stable-diffusion-videos

Voice

Preprocess

demucs

Install

pip install -U demucs

Usage

# only separate vocals

demucs --two-stems=vocals myfile.mp3
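When separating many files, it can help to drive the same command from Python. This sketch only wraps the CLI call shown above with `subprocess`, plus `-n` to pick a model. The output layout `separated/<model>/<track>/{vocals,no_vocals}.wav`, with `htdemucs` as the default model, is an assumption based on Demucs v4 defaults.

```python
import subprocess
from pathlib import Path


def demucs_vocals_command(track, model=None):
    """Build the argv for `demucs --two-stems=vocals <track>`, optionally choosing a model with -n."""
    cmd = ["demucs", "--two-stems=vocals"]
    if model:
        cmd += ["-n", model]
    return cmd + [str(track)]


def expected_stems(track, model="htdemucs", out_dir="separated"):
    """Where the two stems should land, assuming Demucs' default output layout."""
    folder = Path(out_dir) / model / Path(track).stem
    return {"vocals": folder / "vocals.wav", "no_vocals": folder / "no_vocals.wav"}


def separate_vocals(track, model=None):
    """Run demucs (must be on PATH) and return the expected stem paths."""
    subprocess.run(demucs_vocals_command(track, model), check=True)
    return expected_stems(track, model or "htdemucs")
```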

Ultimate Vocal Remover

audio-slicer

Install

pip install librosa

pip install soundfile

Usage

python slicer2.py audio [--out OUT] [--db_thresh DB_THRESH] [--min_length MIN_LENGTH] [--min_interval MIN_INTERVAL] [--hop_size HOP_SIZE] [--max_sil_kept MAX_SIL_KEPT]
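slicer2.py cuts a long recording into clips at its silent parts. The core idea can be sketched in plain Python: compute a frame-wise RMS level, compare it with `--db_thresh`, and cut only where the silence lasts at least `--min_interval` ms. This is a simplified illustration, not the script's actual algorithm. It ignores `--min_length` and `--max_sil_kept`, and it takes samples as a list of floats in [-1, 1] instead of loading audio with librosa.

```python
import math


def frame_db(samples, hop):
    """RMS level in dBFS of each non-overlapping frame of `hop` samples."""
    levels = []
    for start in range(0, len(samples), hop):
        frame = samples[start:start + hop]
        rms = math.sqrt(sum(s * s for s in frame) / len(frame))
        levels.append(20 * math.log10(max(rms, 1e-10)))  # floor avoids log10(0)
    return levels


def slice_on_silence(samples, sr, db_thresh=-40.0, min_interval_ms=300, hop_ms=10):
    """Return (start, end) sample ranges of the voiced chunks; short pauses stay inside a chunk."""
    hop = max(1, sr * hop_ms // 1000)
    min_gap = max(1, min_interval_ms // hop_ms)  # silent frames needed before cutting
    silent = [db < db_thresh for db in frame_db(samples, hop)]
    chunks, start, last_voiced = [], None, None
    for i, is_silent in enumerate(silent):
        if is_silent:
            continue
        if start is None:
            start = i
        elif i - last_voiced - 1 >= min_gap:
            # The silence since the previous voiced frame is long enough: close that chunk
            chunks.append((start, last_voiced + 1))
            start = i
        last_voiced = i
    if start is not None:
        chunks.append((start, last_voiced + 1))
    # Convert frame indices back to sample indices
    return [(s * hop, min(e * hop, len(samples))) for s, e in chunks]
```

In this sketch, a 100 ms pause inside a phrase stays within one clip, while a 400 ms pause splits the audio when `min_interval_ms=300`.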

TTS

Edge-TTS

Install

pip install edge-tts

Usage

edge-tts --text "Hello, world!" --write-media hello.mp3 --write-subtitles hello.vtt

# player required, `brew install mpv`

edge-playback --text "Hello, world!"

# list voices

edge-tts --list-voices

# play with voice

edge-playback --voice zh-CN-shaanxi-XiaoniNeural --text "你好,世界"
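To synthesize several sentences in one go, you can call the CLI in a loop. This sketch shells out to the `edge-tts` command using only the flags shown above. `synthesize_lines` and its `<prefix>_NNN.mp3` naming are hypothetical, and each call needs network access to Microsoft's online voices.

```python
import subprocess


def edge_tts_command(text, media_path, voice=None, subtitles_path=None):
    """Build the argv for one edge-tts call, mirroring the CLI flags shown above."""
    cmd = ["edge-tts", "--text", text, "--write-media", media_path]
    if voice:
        cmd += ["--voice", voice]
    if subtitles_path:
        cmd += ["--write-subtitles", subtitles_path]
    return cmd


def synthesize_lines(lines, voice, prefix="line"):
    """Render each line to <prefix>_001.mp3, <prefix>_002.mp3, ... (edge-tts must be on PATH)."""
    outputs = []
    for n, text in enumerate(lines, start=1):
        media = f"{prefix}_{n:03d}.mp3"
        subprocess.run(edge_tts_command(text, media, voice=voice), check=True)
        outputs.append(media)
    return outputs
```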

Bark

Coqui-TTS

Voice Conversion

SoVITS

- Soft-VC: Soft Speech Units for Improved Voice Conversion

- VITS: Conditional Variational Autoencoder with Adversarial Learning for End-to-End Text-to-Speech

- Sovits: Soft-VC + VITS

- so-vits-svc: Singing Voice Conversion based on SoftVC + vits

- GPT-SoVITS

RVC

Install env

conda install -c conda-forge gcc

conda install -c conda-forge gxx

conda install ffmpeg cmake

conda install pytorch==2.1.1 torchvision==0.16.1 torchaudio==2.1.1 pytorch-cuda=11.8 -c pytorch -c nvidia
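The conda packages above provide compilers and command-line tools that later build and audio-processing steps call by name. This small stdlib sketch reports which of them are missing from `PATH`. The tool list is an assumption derived from the commands above, including that the `gxx` package exposes a `g++` executable.

```python
import shutil

# Executables the conda commands above are assumed to provide
REQUIRED_TOOLS = ["gcc", "g++", "ffmpeg", "cmake"]


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools that shutil.which cannot find on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


print("missing:", missing_tools() or "none")
```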

Install requirements

pip install -r requirements.txt

Download models

cd tools

python download_models.py

Models

Tutorials

Other Projects


NaturalSpeech

Speech Recognition (Speech To Text)

SpeechRecognition

Whisper

Wake Word Listener

References

Rendering

3D

AI Clone

Commercial:

Open source:

EMO (not yet open-sourced)

Others

Problems

- stable-diffusion-webui: Lora cannot be loaded in API mode (`--nowebui`)
  - Fix: copy the `modules.script_callbacks.before_ui_callback()` call into `def api_only()`